Having given up on Flash, we're looking to Unity for our game-writing needs, and that includes playing video with a transparent background. Given that it's 2015, surely Unity is capable of playing videos with a transparent background?

Well, it turns out not. Or at least, not easily.

Unity uses some variant of the Ogg format for video (the Theora codec, apparently) and, bizarrely, QuickTime to handle importing videos into the editor. And since the Theora codec doesn't support an alpha channel, for a while it looked like we'd hit yet another brick wall. For a while at least.

A quick check on the Unity Asset store reveals a number of custom shaders and scripts that can apply chroma keying at runtime. So instead of using a video with an alpha channel, we should be able to simply use a video with a flat, matte green background, and key out the green on-the-fly.

One such off-the-shelf plugin is the Universal Chroma Key shader. But it doesn't work with Unity 5 (and we're still waiting for our refund, Rodrigo!). One that does work is the slightly more expensive UChroma Key by CatsPawsGames. It's easy enough to set up and use, and gives a half-decent result. It's good.

But not great.

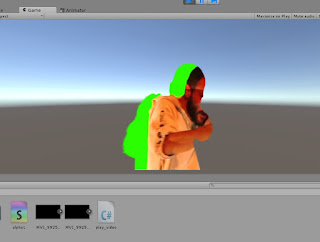

After removing the green background, we're still left with a green "halo" around our main character - which is particularly noticeable when the video is actually playing. Some frames suffer more than others, but the result is a discernible green haze around our hero character. The only way to remove the green completely is to make the keying so "aggressive" that part of the main character starts to disappear too. And, obviously, this is no good for what we're after!

We spent quite a bit on different plug-ins from the online store, but UChroma gave the best results. And even that wasn't quite good enough for what we're after.

Just as we were about to give up on Unity, Scropp (Stephen Cropp) came up with a crazy idea - write our own shader. The reason it was so crazy? Well, some of us couldn't tell a texture from a material, let alone even understand what a shader is or does. Luckily, Stephen had a basic idea of how it all worked. And there were plenty of examples online about creating your own custom shader.

Our thinking was to create a shader which would accept not one, but two, material/textures. The first would be the footage we wanted to play, and the second would be a second movie texture, containing just the alpha data for the first movie. It seemed like it might be worth investigating.

We know that Unity doesn't support videos with alpha channels, but the idea of splitting it over two layers - one containing the footage, and one containing the alpha mask as a greyscale sequence seemed like a sensible one. So that's exactly what we did!

After creating our video footage with a green background and just the RGB (no alpha) channels -

- we exported the same footage from After Effects, only this time, exporting only the alpha channel (to a video format that also only supported RGB, no alpha)

Now it was simply a case of applying our footage video to one plane/quad and the alpha movie to another. Then simply play both movies together, and write a shader which took the alpha from one movie and applied it to the other. So far, so easy. One thing to look out for is that a movie texture doesn't automatically begin playing after being applied to a plane/quad. You need a simple script to kick it into life.

We simply created a "play video" script and dropped it onto each of our video containers. When we hit the "play" button in Unity, both videos started playing automatically:

using UnityEngine;

using System.Collections;

[RequireComponent (typeof(AudioSource))]

public class play_video : MonoBehaviour {

// Use this for initialization

void Start () {

MovieTexture movie = GetComponent<Renderer>().material.mainTexture as MovieTexture;

movie.loop = true;

GetComponent<AudioSource>().clip = movie.audioClip;

GetComponent<AudioSource>().Play ();

movie.Play ();

}

// Update is called once per frame

void Update () {

}

}

The video player script should be pretty self-explanatory: simply get the AudioSource and MovieTexture of the object that this script is placed on, and set them playing. It's that easy! The only stumbling block now is the "write your own custom shader" idea.

Let's start with the simplest of shaders - an unlit, video player shader.

Even if you don't fully understand the "shader language" (as we still don't) it is possible to vaguely follow what's going on in the shader, with a bit of guesswork:

Shader "Custom/alpha1" {

Properties{

_MainTex("Color (RGB)", 2D) = "white"

}

SubShader{

Tags{ "Queue" = "Transparent" "RenderType" = "Transparent" }

CGPROGRAM

#pragma surface surf NoLighting alpha

fixed4 LightingNoLighting(SurfaceOutput s, fixed3 lightDir, fixed atten) {

fixed4 c;

c.rgb = s.Albedo;

c.a = s.Alpha;

return c;

}

struct Input {

float2 uv_MainTex;

};

sampler2D _MainTex;

void surf(Input IN, inout SurfaceOutput o) {

o.Emission = tex2D(_MainTex, IN.uv_MainTex).rgb;

o.Alpha = 1;

}

ENDCG

}

}

So what's going on with this shader?

The first thing to notice is the #pragma line. This is a compiler directive that tells Unity how to build the shader. It says we've created a surface shader, that each pixel is passed through the "surf" (surface render) function and then the "NoLighting" lighting model (which Unity looks up as a function called LightingNoLighting), and the parameter "alpha" tells the shader that not all pixels are fully opaque (apparently, this can make a difference to the drawing order of the object, but we've taken care of this with our "tags" line).

Our nolighting function simply says "irrespective of the surface type or lighting conditions, each pixel should retain its own RGB and Alpha values - nothing in this shader will affect anything in the original texture".

A shader which applies specific lighting effects would have much more going on inside this function. But we don't want any lighting effects to be applied, so we simply return whatever we're sent through this function.

The other function of note is the "surf" (surface render) function. This accepts two parameters: an input structure (carrying the texture coordinates for the current pixel) and a surface output object. This function is slightly different to most shaders in that we don't set the surface texture (Albedo) on our output surface, but the "emission" property.

Think of it like this - we're not lighting a texture that's "printed" onto the plane - we're treating the plane like a television; even with no external lighting, your telly displays an image - the light is emitted from the actual TV screen - it's not dependent on external lighting being reflected off it. This is the same for our plane in our Unity project. Rather than set the "texture" of the plane surface, to match the pixels of the input texture, we're emitting those same pixels from the plane surface.

This is why we have the line

o.Emission = tex2D(_MainTex, IN.uv_MainTex).rgb;

instead of

o.Albedo = tex2D(_MainTex, IN.uv_MainTex).rgb;

If you drop your video onto a plane and add the shader (above), you should see the video being drawn as an unlit object (unaffected by external lighting) with no shadows (since the light is emitting from the plane, not being reflected off it). Perfect! We've written our first "no-light" shader!

Now the trick is to apply the alpha from the alpha material to the shader being used to play the video. That's relatively simple, but needs a bit of careful editing of our original shader:

Shader "Custom/alpha1" {

Properties{

_MainTex("Color (RGB)", 2D) = "white"

_AlphaTex("Alpha (greyscale)", 2D) = "white"

}

SubShader{

Tags{ "Queue" = "Transparent" "RenderType" = "Transparent" }

CGPROGRAM

#pragma surface surf NoLighting alpha

fixed4 LightingNoLighting(SurfaceOutput s, fixed3 lightDir, fixed atten) {

fixed4 c;

c.rgb = s.Albedo;

c.a = s.Alpha;

return c;

}

struct Input {

float2 uv_MainTex;

float2 uv_AlphaTex;

};

sampler2D _MainTex;

sampler2D _AlphaTex;

void surf(Input IN, inout SurfaceOutput o) {

o.Albedo = tex2D(_MainTex, IN.uv_MainTex).rgb;

o.Alpha = tex2D(_AlphaTex, IN.uv_AlphaTex).r; // the mask is greyscale, so one channel is enough

}

ENDCG

}

}

The main changes here are:

Properties now include two materials/textures: the texture for the video to play, and a texture for the alpha mask. The input structure now includes texture coordinates for both the video texture and the alpha texture. And the final line in our "surf" (surface render) function has changed:

Instead of setting the alpha (transparency) of every pixel to one (fully opaque) we look at the matching pixel in the alpha texture and use this to set the transparency of the pixel. So a white pixel in the alpha texture represents fully opaque, a black pixel represents fully transparent, and a grey shade, somewhere in between, indicates a semi-transparent pixel.
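To make the white/black/grey rule concrete, here is a minimal sketch in plain C# (no Unity needed) of what the blend works out to for a single colour channel. It assumes conventional alpha blending, where the result is video * alpha + background * (1 - alpha). The class and method names are made up for illustration and are not part of the shader.

```csharp
using System;

public static class AlphaMaskDemo
{
    // Hypothetical helper: blend one colour channel of the video over the
    // background. maskGrey is the mask brightness, 0 (black) to 1 (white).
    public static float Blend(float video, float background, float maskGrey)
    {
        return video * maskGrey + background * (1f - maskGrey);
    }

    public static void Main()
    {
        float video = 0.8f, background = 0.2f;
        Console.WriteLine(Blend(video, background, 1f));   // white mask: the video value
        Console.WriteLine(Blend(video, background, 0f));   // black mask: the background value
        Console.WriteLine(Blend(video, background, 0.5f)); // mid-grey mask: halfway between
    }
}
```

The shader does the same thing for every pixel, with the mask brightness coming from the alpha texture rather than a hard-coded number.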

We also need to ensure that the play_video.cs script is applied to both planes and untick the "Mesh Renderer" component on the alpha plane (so that it does not get drawn to the screen when the game is played).

Hitting play now and we see our video footage playing, with an alpha mask removing all of the green pixels.

Well. Sort of.

After the video has looped a couple of times, it becomes obvious that the two movies are slowly drifting out of sync. After 20 seconds or more, the lag between the movies has become quite noticeable.

It looks like we're almost there - except it needs a bit of tweaking. Having our video and alpha footage on two separate movie clips is obviously causing problems (and probably putting quite a load on our GPU). What we need is a way of ensuring that the alpha and movie footage is always perfectly in sync.

What we need is to place the video and alpha footage in the same movie file!

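After a bit of fiddling about with our two movies in After Effects, that's exactly what we've got: a single movie, with the footage in the top half of the frame and the greyscale alpha mask in the bottom half (this is why the shader below looks up the alpha half a frame lower than the pixel it is drawing).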

Here's the code for the updated shader:

Shader "Custom/alpha1" {

Properties{

_MainTex("Color (RGB)", 2D) = "white"

}

SubShader{

Tags{ "Queue" = "Transparent" "RenderType" = "Transparent" }

CGPROGRAM

#pragma surface surf NoLighting alpha

fixed4 LightingNoLighting(SurfaceOutput s, fixed3 lightDir, fixed atten) {

fixed4 c;

c.rgb = s.Albedo;

c.a = s.Alpha;

return c;

}

struct Input {

float2 uv_MainTex;

};

sampler2D _MainTex;

void surf(Input IN, inout SurfaceOutput o) {

o.Emission = tex2D(_MainTex, IN.uv_MainTex).rgb;

o.Alpha = tex2D(_MainTex, float2(IN.uv_MainTex.x, IN.uv_MainTex.y-0.5)).r; // mask lives half a frame below the footage

}

ENDCG

}

}

Now we're almost there.

Gone are the nasty out-of-sync issues, and the alpha mask applies perfectly on every frame of the video. Underneath the video we can still see our alpha mask, wherever the video footage has been drawn. We've decided that this is because if no more mask exists, the shader uses the previous "line" of mask and continues to draw in the same place as the previous "scan line".

This could easily be fixed by drawing a single horizontal black line along the bottom of our alpha mask footage (so the final scan line of the mask is 100% transparent, along its full length, and this would be applied to every pixel below our main character).
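Here is a small sketch in plain C# of why the lower half misbehaves. Going by the shader above, it assumes the footage sits in the top half of the frame and the mask in the bottom half, and it also assumes the movie texture's wrap mode is Clamp, so a lookup below the bottom edge returns the bottom row. MaskV is a hypothetical name for illustration, not a Unity call.

```csharp
using System;

public static class PackedFrameDemo
{
    // Hypothetical helper: the V coordinate the mask is actually read from
    // when the shader draws a pixel at V coordinate v (0 = bottom, 1 = top).
    public static float MaskV(float v)
    {
        float shifted = v - 0.5f;
        // Assumption: Clamp wrap mode, so out-of-range lookups stick to the edge.
        return Math.Max(0f, Math.Min(1f, shifted));
    }

    public static void Main()
    {
        Console.WriteLine(MaskV(0.75f)); // top half: reads the mask half a frame lower
        Console.WriteLine(MaskV(0.4f));  // bottom half: clamped to the bottom row
        Console.WriteLine(MaskV(0.1f));  // bottom half: the same bottom row again
    }
}
```

Under those assumptions every pixel in the bottom half reads the same bottom row of the mask, which is why making that one row black would clear everything beneath the character.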

Another approach might be a simple "if" statement:

if(IN.uv_MainTex.y < 0.5){

o.Alpha=0;

}else{

o.Alpha = tex2D(_MainTex, float2(IN.uv_MainTex.x, IN.uv_MainTex.y-0.5)).r;

}

which yields the following result:

Well, it looks like we're nearly there.

There's still an occasional slight tinge around the character, particularly where there's a lot of fast motion (or motion blur) in the footage. If you look closely under the arm supporting the gun in the image above, you can see a slight green tinge. But in the main, it's pretty good.

There are a couple of ways of getting rid of this extra green:

The most obvious one is to simply remove the green from the video footage. Since we're no longer using this colour as a runtime chroma-key, there's no need for the background to be green - it could be black, or white, or some grey shade, somewhere in between (grey is less conspicuous as an outline colour than either harsh black or harsh white, depending on the type of background to be displayed immediately behind the video footage).

The other way of removing this one-pixel wide halo is to reduce the alpha mask by three pixels, then "blur" it back out by two pixels. This creates a much softer edge all the way around our main character (helping them to blend in with any background image) and removes the final pixel all the way around the alpha mask.
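We did this step in After Effects, but the idea can be sketched on a single row of mask pixels in plain C#. This is only an illustration under assumptions: Erode and BoxBlur are hypothetical names, and a simple box blur stands in for whatever blur the editing package really applies.

```csharp
using System;

public static class MaskEdgeDemo
{
    // Shrink the mask: each pixel becomes the darkest value within `radius` pixels.
    public static float[] Erode(float[] row, int radius)
    {
        var result = new float[row.Length];
        for (int i = 0; i < row.Length; i++)
        {
            float min = row[i];
            int from = Math.Max(0, i - radius);
            int to = Math.Min(row.Length - 1, i + radius);
            for (int j = from; j <= to; j++) min = Math.Min(min, row[j]);
            result[i] = min;
        }
        return result;
    }

    // Soften the edge: each pixel becomes the average of its neighbours within `radius`.
    public static float[] BoxBlur(float[] row, int radius)
    {
        var result = new float[row.Length];
        for (int i = 0; i < row.Length; i++)
        {
            int from = Math.Max(0, i - radius);
            int to = Math.Min(row.Length - 1, i + radius);
            float sum = 0f;
            for (int j = from; j <= to; j++) sum += row[j];
            result[i] = sum / (to - from + 1);
        }
        return result;
    }

    public static void Main()
    {
        // One row of mask: transparent, then an opaque run from pixel 5 to 24.
        var row = new float[30];
        for (int i = 5; i <= 24; i++) row[i] = 1f;

        float[] softened = BoxBlur(Erode(row, 3), 2);
        Console.WriteLine(string.Join(" ", softened));
    }
}
```

In this sketch the outermost opaque pixel of the original mask (pixel 5) ends up fully transparent, and the pixels just inside it ramp up gradually to fully opaque.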

We had settled on a combination of both of these techniques when Scropp came up with the "proper" way to fix it: "clamp green to red" rendering. What this basically means is that for each pixel, compare the green and red channels of the image, and if the green value exceeds the red, limit it to be not more than the red channel.

If you think about it, this means that any pure green colour will be effectively reduced back to black. But any very pale green tint will also be reduced a tiny bit. Pure white, however, would not be affected, since it has the same amount of red as it does green.

Any pure green colours get blended to black. Very light green shades are blended more to yellow (when mixing RGB light, equal parts red and green create yellow) or pale blue (a turquoise colour of equal parts blue and green with very little red would be blended more to a blue colour as the green element is reduced). But for the colour to be a very light shade, it has to have lots of red, green and blue in it, so the amount of colour adjusted is actually pretty small.
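The rule is easy to try out on a few sample colours. This is a minimal sketch in plain C# (not shader code), with colour channels in the range 0 to 1; the Rgb struct and ClampGreenToRed name are hypothetical and exist only for this illustration.

```csharp
using System;

public static class GreenSpillDemo
{
    // Colour channels are in the range 0..1, as they are inside a shader.
    public struct Rgb
    {
        public float R, G, B;
        public Rgb(float r, float g, float b) { R = r; G = g; B = b; }
        public override string ToString() { return "(" + R + ", " + G + ", " + B + ")"; }
    }

    // Hypothetical helper: limit green so that it never exceeds red.
    public static Rgb ClampGreenToRed(Rgb c)
    {
        return new Rgb(c.R, Math.Min(c.G, c.R), c.B);
    }

    public static void Main()
    {
        Console.WriteLine(ClampGreenToRed(new Rgb(0f, 1f, 0f)));       // pure green becomes black
        Console.WriteLine(ClampGreenToRed(new Rgb(1f, 1f, 1f)));       // white is untouched
        Console.WriteLine(ClampGreenToRed(new Rgb(0.1f, 0.8f, 0.8f))); // turquoise loses its green, leaving blue
        Console.WriteLine(ClampGreenToRed(new Rgb(0.9f, 1f, 0.5f)));   // pale green shifts towards yellow
    }
}
```

Red and blue are never changed, so only pixels where green dominates red are affected at all.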

So our final shader code looks like this:

Shader "Custom/alpha1" {

Properties{

_MainTex("Color (RGB)", 2D) = "white"

}

SubShader{

Tags{ "Queue" = "Transparent" "RenderType" = "Transparent" }

CGPROGRAM

#pragma surface surf NoLighting alpha

fixed4 LightingNoLighting(SurfaceOutput s, fixed3 lightDir, fixed atten) {

fixed4 c;

c.rgb = s.Albedo;

c.a = s.Alpha;

return c;

}

struct Input {

float2 uv_MainTex;

};

sampler2D _MainTex;

void surf(Input IN, inout SurfaceOutput o) {

// Sample the footage pixel once and reuse it

fixed3 c = tex2D(_MainTex, IN.uv_MainTex).rgb;

// "Clamp green to red": green may never exceed red

if( c.g > c.r ){

c.g = c.r;

}

o.Emission = c;

if(IN.uv_MainTex.y < 0.5){

o.Alpha=0;

}else{

o.Alpha = tex2D(_MainTex, float2(IN.uv_MainTex.x, IN.uv_MainTex.y-0.5)).r;

}

}

ENDCG

}

}

And the result looks something like this:

Bang and the nasty green halo effect is gone!

The best bit about Stephen's approach is that as well as getting rid of the green spill that creates the green halo effect around our main character, it also helps reduce any obvious green areas on the skin and clothes, where the greenscreen might have been reflected onto it.

So there we have it - from three days ago, with no obvious way of being able to play a transparent video against a programmable background, to a custom Unity shader that does exactly the job for us! Things are starting to look quite promising for our zombie board/video game mashup....
