Although each board section was built on an "industry standard" 8x6 grid of 1-inch squares, we tried out a number of miniature scales on the boards.

We loved the idea of 15mm, because:

- more game on a physically smaller playing area

- miniatures are cheaper and quicker to paint

- laser-cut terrain is cheaper and easier to create

- small miniatures fit easily into our 1" squares.

But 15mm is not without its downsides:

a) there's a massive difference in sizes between manufacturers (some create real 15mm, some are as large as 18mm or more)

b) the miniatures can look a bit "lost" when placed one per square on a 1" grid.

Of course, 28mm (and the more recent 32mm) is a great scale for tabletop skirmish games, because:

a) there's a massive range of miniatures already available

b) miniatures have lots of great-looking detail when painted

c) for small-to-medium-sized games, the larger miniatures look very impressive on the tabletop

But on the downside:

- individual miniatures are relatively expensive - building a large horde of zombies, for example, could get quite pricey

- painting all those lovely details takes time. Lots of time.

- terrain looks great but can be quite bulky and take up a lot of space

- the board can look quite crowded when a number of playing pieces are placed closely together on a 1" grid.

Fortunately, we found a great halfway house: 20mm miniatures.

They have a lot of the benefits of both 15mm and 28mm/32mm scale miniatures:

- They have enough detail to stay interesting, but not so much that they take forever to paint

- They match nicely with modern-day terrain (small cars, 1/76th railway models, etc.)

- They're relatively cheap, even when buying in bulk

- They look very impressive when assembled in volume on the tabletop

- They are neither too large nor too small for a 1" grid

We'd love to stick with 20mm for everything, but as a scale for tabletop gaming it suffers one massive drawback: there are very few decent suppliers with a range large enough to justify asking all the potential players of our game to invest in (yet another) scale.

We've really tried everything, from approaching 20mm suppliers to investigating casting our own range of miniatures. But that's not something we want to get tied up with! So we have to decide which off-the-shelf scale to use for our game.

Whichever scale we decide on, we're going to have to redesign our board sections for future versions, either scaling them up for 28mm/32mm or scaling them down for "true" 15mm.

Given that scale creep only ever seems to go upwards (28mm has become 32mm and is creeping towards 35mm, while 15mm is often more like 18mm), we've decided to simply go with the scale that currently has the most miniatures available for it. That means we're shifting over to 28mm/32mm.

In truth, we'd always had one eye on this as our preferred scale anyway; it was building the playing surfaces on a 1" grid that led us to try other scales and sizes. The one thing we've stuck to throughout all this has been our 1" grid arrangement.

Unfortunately, it's this 1" grid arrangement that's held us back!

So we're dropping the one thing we've stuck to rigidly since day one of this project, in order to claw back some of the benefits of using existing miniatures and playing pieces. Which leads to the next question: how big should our grid squares be?

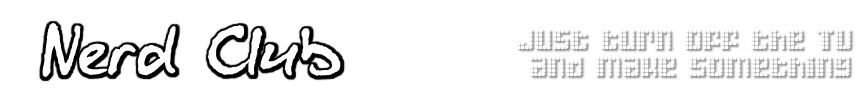

We printed a couple of different sized grids for comparison:

It's immediately obvious that, for 28mm/32mm miniatures, our 1" square grid is simply too small. The space marines in the background are touching and overlapping each other, and picking them up and moving them around is too fiddly to be practical. Our football players in the foreground are supposed to represent players in a rugby-style wrestling ruck (though where the ball is, who knows?!)

Increasing the grid size to 30mm gives the space marines a bit more breathing space, but when they share the board with oversized miniatures (like the Mech robot) or with scenery and walls, things could still get a bit crowded.

On an uncrowded, open board (such as a football field), our two football players still look close enough to be engaged.

Lastly, we tried 35mm grid squares (we did also try 40mm, but quickly decided these were simply too big). At this size, there's plenty of room around our space marine characters, and our oversized miniatures (like the Mech robot, or characters such as GW termagaunts) fit inside the grid squares, even when three or four need to be placed in close proximity.

Our football players now start to look as though there's a bit of space between them. If the grid squares were any larger, they wouldn't look right representing two players locked in a physical tussle.

So there we have it. We've decided to "upscale" our playing board sections to 35mm squares (instead of the current 1", or 25.4mm, squares). In doing so, we'll leave all thoughts of smaller-scale miniatures behind and focus on the most popular 28mm/32mm scale.
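To get a feel for what this means physically, here's a minimal Python sketch of the arithmetic. It assumes each section stays an 8x6 grid and ignores any border or margin around the squares; the names `GRID_PITCHES_MM` and `section_size_mm` are purely illustrative.

```python
# Hypothetical helper: board section size for different grid pitches.
# Assumes an 8 x 6 grid of squares with no border or margin.

INCH_MM = 25.4  # 1 inch in millimetres

# Grid pitches compared in this post, in millimetres.
GRID_PITCHES_MM = {"1 inch": INCH_MM, "30mm": 30.0, "35mm": 35.0}

COLS, ROWS = 8, 6  # squares per board section


def section_size_mm(pitch_mm: float, cols: int = COLS, rows: int = ROWS) -> tuple[float, float]:
    """Return (width, depth) of one board section in mm, ignoring any border."""
    return cols * pitch_mm, rows * pitch_mm


if __name__ == "__main__":
    for name, pitch in GRID_PITCHES_MM.items():
        width, depth = section_size_mm(pitch)
        linear = pitch / INCH_MM   # how much longer each edge gets vs. a 1" grid
        area = linear ** 2         # how much more table space each section needs
        print(f"{name:>6}: {width:.1f} x {depth:.1f} mm "
              f"(x{linear:.3f} per edge, x{area:.3f} area)")
```

On these assumptions, moving from 1" to 35mm squares makes each section grow from about 203 x 152 mm to 280 x 210 mm. Each edge gets roughly 1.38 times longer, so a section needs roughly 1.9 times the table area.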

Sure, individual miniatures can be relatively expensive compared to their 15mm/20mm counterparts. But there's such a massive range of miniatures from so many suppliers that the extra cost must surely be worth it, if only for the ability to source exactly the miniature you're looking for.

With all the recent focus on software development, it's been easy to forget about the physical game. Well, not so much "forget" as "put on the back burner". At least with this decision made and put to bed, we can drop crazy ideas such as creating a range of custom miniatures, or getting miniatures made to match our Unity characters. After all, it'd be far cheaper to digitally sculpt an existing miniature character and include that in our game than to create an entire range of niche, obscurely-sized pewter miniatures just to match the artwork in our Unity-based app!

If it means changing our 1" grid in order to access the massive range of skirmish-sized miniatures already out on the market, then that's what we'll do.